Automating Microservices on AWS

With the growing need to manage and monitor microservices on the cloud, it becomes imperative to enforce standardization across the board.

Organizations are rapidly adopting the cloud, along with microservices and agile approaches to development, deployment, and testing. That pace creates challenges, especially in large organizations, where multiple projects typically run simultaneously and each project has a large number of microservices. The result is hundreds of microservices under development, worked on by individuals and teams with varying skills, expertise, and experience.

The first complaint companies raise is the lack of proper tagging. With so many microservices being developed, it becomes impossible to trace them without consistent tags. Tags are also how costs are allocated to different projects. Tags such as the project name, application name, and environment name (DEV, QA, and so on) go a long way toward managing cloud resources better.
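As a minimal sketch of how such tags could be standardized and then used for cost allocation, the snippet below builds a tag set, rejects incomplete ones, and sums costs per project. The tag keys (`Project`, `Application`, `Environment`), the `PROD` environment, the `monthly_cost` field, and the function names are illustrative assumptions rather than a prescribed convention.

```python
from typing import Dict, List

# Hypothetical tag policy: the keys and allowed environments are assumptions.
REQUIRED_TAG_KEYS = ("Project", "Application", "Environment")
ALLOWED_ENVIRONMENTS = {"DEV", "QA", "PROD"}


def build_tags(project: str, application: str, environment: str) -> Dict[str, str]:
    """Return a standard tag set, raising ValueError if a value is unusable."""
    tags = {
        "Project": project.strip(),
        "Application": application.strip(),
        "Environment": environment.strip().upper(),
    }
    missing = [key for key in REQUIRED_TAG_KEYS if not tags[key]]
    if missing:
        raise ValueError("missing tag values: " + ", ".join(missing))
    if tags["Environment"] not in ALLOWED_ENVIRONMENTS:
        raise ValueError("unknown environment: " + tags["Environment"])
    return tags


def cost_by_project(resources: List[dict]) -> Dict[str, float]:
    """Sum an (assumed) monthly_cost figure per Project tag.

    Resources without a Project tag are grouped under "UNTAGGED",
    which makes untraceable spend visible.
    """
    totals: Dict[str, float] = {}
    for resource in resources:
        project = resource["tags"].get("Project", "UNTAGGED")
        totals[project] = totals.get(project, 0.0) + resource["monthly_cost"]
    return totals
```

Grouping untagged resources under a single bucket is a design choice for the sketch: it shows how much spend cannot be attributed when tagging is skipped.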

Another issue that frequently comes up is the lack of standardization. For example, teams often don’t follow a standard naming convention. They might include project or application names when naming resources, but the pattern is generally not consistent across all projects in an organization: one team prefixes the project name to its resources, while another adds it as a suffix. Then there’s the problem of code libraries. Teams tend to use different versions of the JDK, and where Spring Boot is used, its version usually differs across the systems under development. These inconsistencies make it much harder to manage and monitor resources on the cloud and to provide ongoing support.
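One way to remove the prefix-versus-suffix ambiguity is to generate resource names from a single function instead of typing them by hand. The sketch below assumes a hypothetical `project-application-service-environment-kind` pattern with lowercase alphanumeric segments; the pattern and the function names are illustrative only.

```python
import re

# Assumed rule: each name segment is lowercase letters and digits only.
_SEGMENT = re.compile(r"^[a-z0-9]+$")


def resource_name(project: str, application: str, service: str,
                  environment: str, kind: str) -> str:
    """Build a name in a fixed segment order so every team gets the same shape."""
    segments = [s.strip().lower()
                for s in (project, application, service, environment, kind)]
    for segment in segments:
        if not _SEGMENT.match(segment):
            raise ValueError("invalid name segment: %r" % segment)
    return "-".join(segments)


def follows_convention(name: str, project: str) -> bool:
    """Check that an existing name starts with the project segment."""
    return name.startswith(project.lower() + "-")
```

For example, `resource_name("Billing", "Invoices", "Pdf", "DEV", "ecr")` returns `billing-invoices-pdf-dev-ecr`, and `follows_convention` can flag names that put the project anywhere but first.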

Developers are usually protective of their code and tend to go easy on unit testing. But the value of unit testing should not be underestimated. A well-constructed unit test suite goes a long way toward reducing the risk of regression defects. So there must be a way to enforce unit testing and measure coverage.
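Enforcement usually means a gate in the build that reads a coverage report and fails below a threshold. The article does not name a coverage tool, so the sketch below assumes a JaCoCo-style XML report whose root element carries `<counter type="LINE" missed="…" covered="…"/>` children, and an 80% minimum chosen purely for illustration.

```python
import xml.etree.ElementTree as ET


def line_coverage(report_xml: str) -> float:
    """Return line coverage (0.0 to 1.0) from a JaCoCo-style XML report string."""
    root = ET.fromstring(report_xml)
    # Only the report-level counters (direct children of the root) are read.
    for counter in root.findall("counter"):
        if counter.get("type") == "LINE":
            missed = int(counter.get("missed"))
            covered = int(counter.get("covered"))
            total = missed + covered
            return covered / total if total else 0.0
    raise ValueError("report has no LINE counter")


def coverage_gate(report_xml: str, minimum: float = 0.80) -> int:
    """Return a process exit code: 0 if coverage meets the minimum, else 1."""
    return 0 if line_coverage(report_xml) >= minimum else 1
```

A build step could pass the returned value to `sys.exit`, so that low coverage fails the pipeline in the same way a failing test does.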

The other questions are about automation. Can parts of the development be automated? What is the best way to build and deploy services? It generally takes considerable time to build the foundation, develop deployment scripts, and create CI/CD pipelines.

A utility tool that creates microservices and takes care of their deployment addresses the challenges above. It can be used as a command line tool or distributed through AWS Service Catalog. The next section outlines a reference architecture and the steps for creating and using such a tool.

Solution

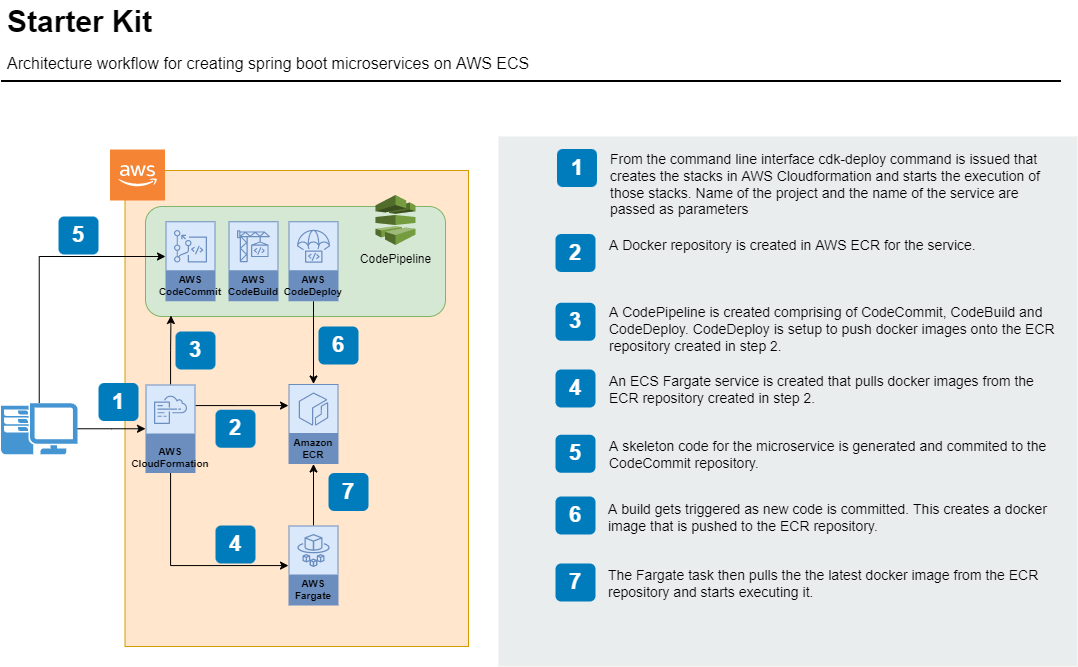

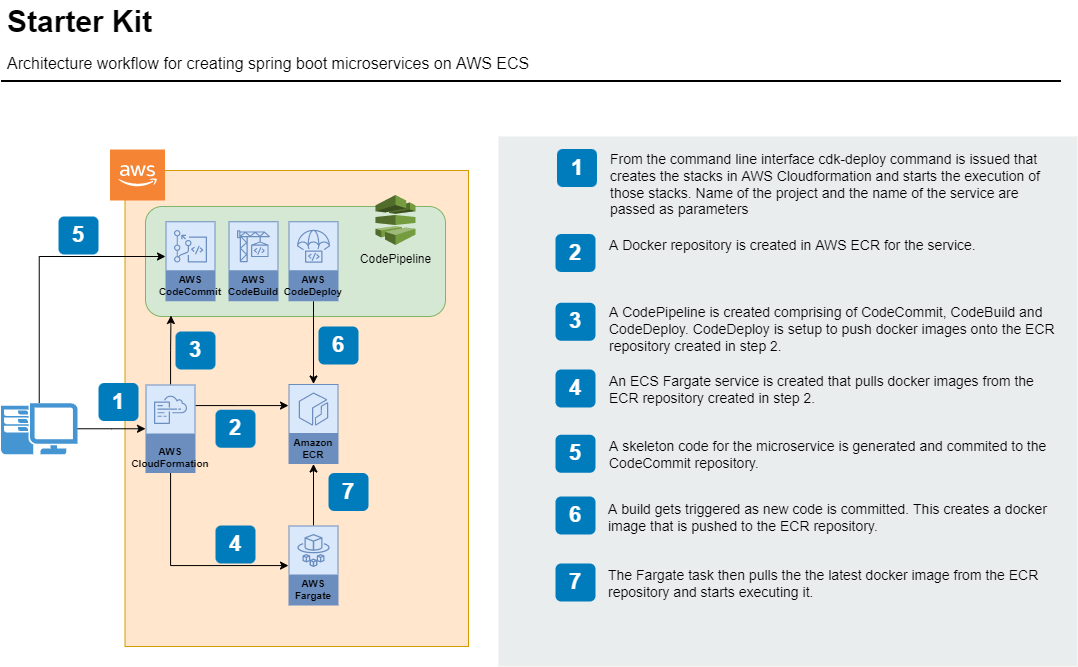

The utility tool is a one-click solution that generates the structure of a microservice, creates all necessary resources on AWS along with a CI/CD pipeline, commits the skeleton code, and deploys it to ECS after a successful build. It’s a command line tool that takes the project, application, and service names as input and auto-generates everything else. The diagrams above outline the architecture and the event flow of the system.
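The command line interface described above could be sketched as follows. The program name `ms-bootstrap`, the flag names, and the wording of the steps are hypothetical; only the three inputs and the overall order of work come from the description.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the three inputs the tool needs; everything else is derived."""
    parser = argparse.ArgumentParser(
        prog="ms-bootstrap",  # hypothetical tool name
        description="Generate and deploy a microservice skeleton.",
    )
    parser.add_argument("--project", required=True, help="project name")
    parser.add_argument("--application", required=True, help="application name")
    parser.add_argument("--service", required=True, help="service name")
    return parser.parse_args(argv)


def plan_steps(args: argparse.Namespace) -> List[str]:
    """List the work the tool would perform, in order, for the given inputs."""
    base = "%s-%s-%s" % (args.project, args.application, args.service)
    return [
        "generate skeleton project for " + base,
        "create AWS resources and CI/CD pipeline for " + base,
        "commit skeleton code for " + base,
        "build image and deploy " + base + " to ECS",
    ]
```

Calling `plan_steps(parse_args(["--project", "billing", "--application", "invoices", "--service", "pdf"]))` yields the four steps for that service. A real tool would execute each step rather than describe it.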

The solution has two parts. The first part generates the skeleton Spring Boot project using a Maven archetype. The archetype generates the structure of the project, complete with method signatures and request and response classes, along with the associated unit test classes. The generated code builds cleanly. From then on, a failing unit test or low coverage results in a failed build.
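A wrapper tool would typically invoke the archetype non-interactively. The sketch below only assembles the `mvn archetype:generate` command line; the archetype coordinates (`com.example.archetypes:microservice-archetype:1.0.0`), the `com.example` group prefix, and the initial version are placeholders, since the article does not publish the actual archetype.

```python
from typing import List


def archetype_command(project: str, application: str, service: str,
                      archetype_group_id: str = "com.example.archetypes",
                      archetype_artifact_id: str = "microservice-archetype",
                      archetype_version: str = "1.0.0") -> List[str]:
    """Build a non-interactive Maven archetype invocation for one service."""
    group_id = "com.example.%s.%s" % (project.lower(), application.lower())
    return [
        "mvn", "archetype:generate",
        "-B",  # batch mode: do not prompt for missing values
        "-DarchetypeGroupId=" + archetype_group_id,
        "-DarchetypeArtifactId=" + archetype_artifact_id,
        "-DarchetypeVersion=" + archetype_version,
        "-DgroupId=" + group_id,
        "-DartifactId=" + service,
        "-Dversion=0.1.0-SNAPSHOT",
    ]
```

The returned list can be handed to `subprocess.run(cmd, check=True)` on a machine where Maven and the archetype are installed. Returning a list instead of a shell string avoids quoting problems with unusual service names.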

The second part creates the required AWS resources using the AWS Cloud Development Kit (CDK). CDK is an infrastructure as code (IaC) offering from AWS. It lets you define infrastructure in code and then provision it through CloudFormation stacks. Here, CDK is used to create a CodePipeline comprising CodeCommit, CodeBuild, and CodeDeploy, an Elastic Container Registry (ECR) repository, and an Elastic Container Service (ECS) service running on Fargate. All resources that are created follow the same standards. They are properly tagged with values for the project name, application name, and so on.
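CDK synthesizes CloudFormation templates from code. To illustrate what consistent naming and tagging might look like in the resulting template, the sketch below hand-builds a CloudFormation-style fragment for an ECR repository in plain Python. It does not use CDK itself, and the logical ID `ServiceRepository`, the naming pattern, and the tag keys are assumptions.

```python
import json
from typing import Dict


def ecr_repository_fragment(project: str, application: str,
                            service: str, environment: str) -> Dict:
    """Return a template fragment declaring one tagged ECR repository."""
    # Assumed naming pattern: lowercase segments joined by hyphens.
    name = "-".join(part.lower()
                    for part in (project, application, service, environment))
    tags = {
        "Project": project,
        "Application": application,
        "Environment": environment,
    }
    return {
        "Resources": {
            "ServiceRepository": {  # hypothetical logical ID
                "Type": "AWS::ECR::Repository",
                "Properties": {
                    "RepositoryName": name,
                    "Tags": [{"Key": k, "Value": v} for k, v in tags.items()],
                },
            }
        }
    }


def render(fragment: Dict) -> str:
    """Serialize the fragment as indented JSON for inspection."""
    return json.dumps(fragment, indent=2)
```

With CDK, the same name and tags would be passed to the corresponding constructs, and the other resources (pipeline, ECS service) would reuse the same inputs so that every resource for a service carries identical tags.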

Once the tool executes successfully, the code is available in the code repository, an initial build has completed, and the corresponding Docker image has been pushed to ECR. The image is deployed to ECS on Fargate and can be accessed by external or internal clients. Developers can now start adding business logic to the skeleton code without being concerned about anything else.

Benefits

- Achieve standardization across projects in terms of naming, versioning, etc. This increases the observability of the system. With standard nomenclature, monitoring becomes simpler, and it's easier to search for resources in logs.
- Proper tags are put in place for all resources created in AWS. This helps in categorizing resources and in reporting costs per project or account.
- Build and deployment are automated from the beginning. This ensures proper DevOps practices and makes it possible to enforce best practices, such as failing a build when unit test coverage is low.
- The structure of the project is generated, enabling developers to focus on business logic. Developers generally don't like to work on boilerplate code. Generating the structure removes that work and increases productivity.
- It’s a one-click solution that gets services up and running within minutes. This drastically reduces the time needed to start developing microservices on AWS.
- Reduces development and testing cycles for services. The structure is already created by the tool, which also enforces strict unit test coverage. This results in high code quality with minimal effort.

With the growing need to manage and monitor microservices on the cloud, it becomes imperative for organizations to enforce standards across the board. Also, some teams are familiar with microservices development but less familiar with cloud technologies, so there is a constant lookout for tools and techniques that get teams up and running with microservices development in the minimum possible time. A utility tool like the one described here addresses both needs by standardizing naming and tagging and by automating build and deployment from the start. CDK facilitates AWS-native development, and the starter kit can be extended to other development scenarios. For example, it can be used to create Lambda functions in a similar way, or to build the entire network, including VPCs, subnets, and associated routes.

