Challenge Your Cybersecurity Systems With AI Controls in Your Hand

Is your organization's data safe from cybercriminals? If not, be ready to bear the brunt of a weak defense system!

Since the early days of the internet, cybersecurity has been a prime concern: protecting computers, mobile devices, electronic systems, servers, networks, and data from malicious attacks. Today, AI sits at the center of many operations, and organizations that want to stay relevant cannot ignore it. AI makes work easier for professionals, but it also brings numerous threats, and one of the most serious is the ethical use of data. This article gives you a broad understanding of what you need to know to safeguard your AI systems and your invaluable data. Let’s get started!

For a long time, many organizations have relied on breach and attack simulation (BAS) to mitigate security risks.

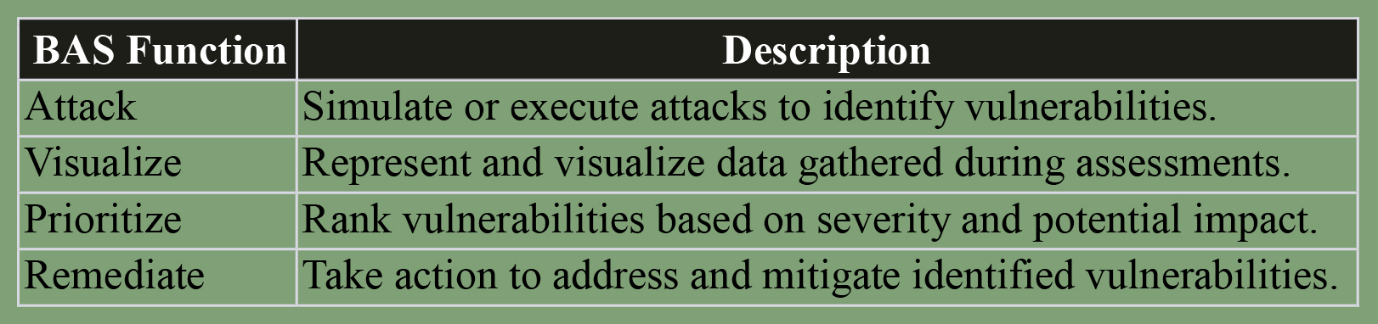

BAS mitigates cyber threats through the following:

- Running continuous simulations, both manual and automated

- Mapping attacks to the cyber kill chain

- Understanding how reliable current security controls are

- Assessing current risks and those likely to emerge in the near future

- Finding remedies

- Recording risk findings and prioritizing their remediation
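The last step, prioritizing findings, is often done with a simple risk matrix that multiplies likelihood by impact. The sketch below assumes that scheme, a 1 to 5 scale for each factor, and hypothetical finding names; real BAS tools apply their own scoring models, so treat this only as an illustration of the idea.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One weakness surfaced by a simulation run (hypothetical structure)."""

    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int  # assumed scale: 1 (minor) to 5 (severe)

    @property
    def risk_score(self) -> int:
        # Risk-matrix score: likelihood x impact, giving a value from 1 to 25
        return self.likelihood * self.impact


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings from highest to lowest risk score.

    Ties are broken by impact, so a severe issue outranks a frequent minor one.
    """
    return sorted(findings, key=lambda f: (f.risk_score, f.impact), reverse=True)


if __name__ == "__main__":
    # Hypothetical findings from one simulation cycle
    findings = [
        Finding("Phishing payload not blocked at mail gateway", likelihood=4, impact=3),
        Finding("Lateral movement via reused admin password", likelihood=2, impact=5),
        Finding("Outdated TLS on internal dashboard", likelihood=3, impact=2),
    ]
    for f in prioritize(findings):
        print(f"{f.risk_score:>2}  {f.name}")
```

Breaking ties on impact is a design choice: when two findings score the same, the one that would do more damage is fixed first.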

For any organization, checking the effectiveness of its cybersecurity and vulnerability management is of paramount importance. Everything from response speed to putting policies in place and running regular procedural checks to remediate security threats requires unwavering effort. Here are four of the most common and significant enterprise threat management practices:

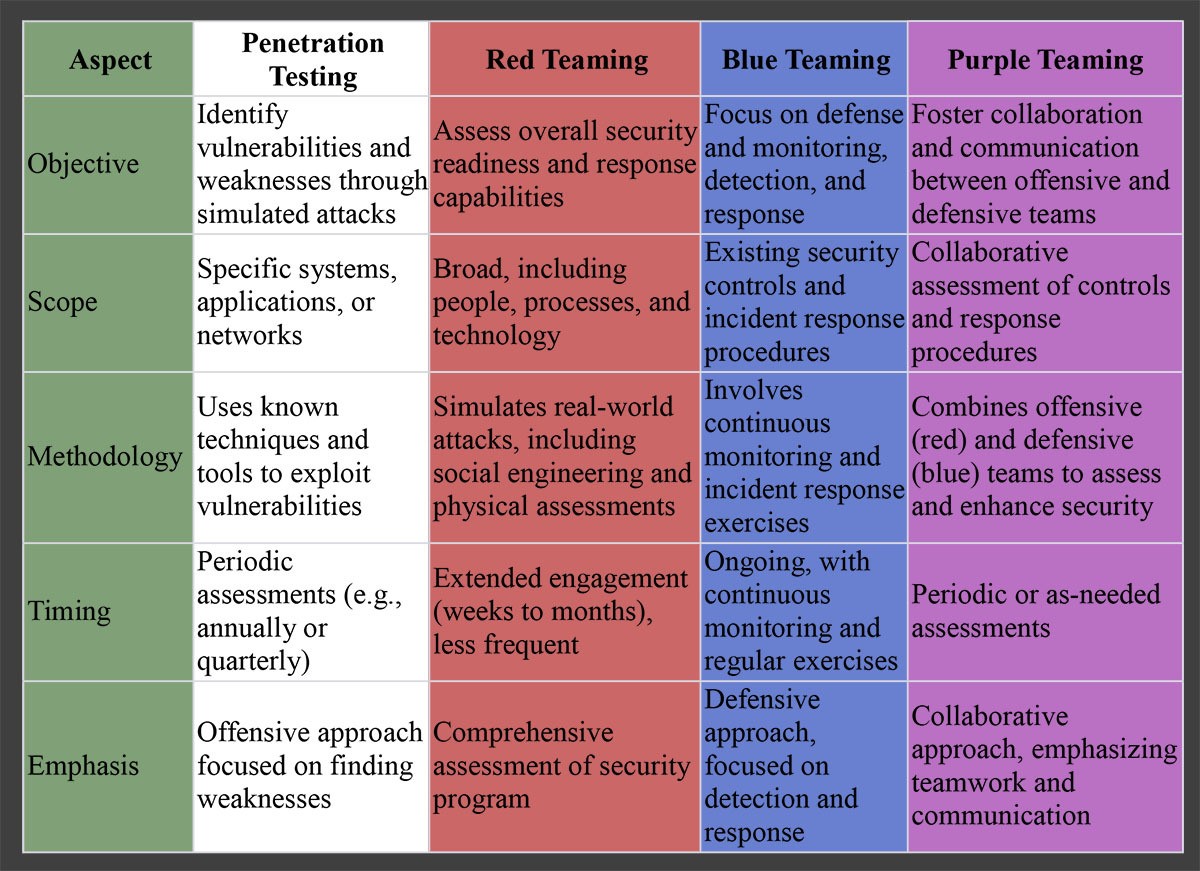

- Penetration Testing is one of the most widely practiced approaches companies use to identify infrastructure vulnerabilities. It covers endpoint devices, applications, and networks. Skilled security professionals use tools to attack the organization's existing devices, networks, and applications, uncover vulnerabilities, and then work to mitigate the risks.

- Red Teaming is closer to full-scale ‘ethical hacking.’ It is an advanced form of threat control in which the organization's existing defenses are put to the test by hackers the organization engages for that purpose. These experts do their best to breach the company’s security and reveal where its security controls need to improve. Because they think like outside attackers, they bring a perspective free of the defenders' internal assumptions.

- Blue Teaming is the defensive side of the exercise. The organization's internal security team does its best to defend the organization's systems. On its own, it is not foolproof: internal members often struggle to identify probable threats, which leaves room for bias.

- Purple Teaming connects the red and blue teams. It draws insights from both and uses them to run advanced persistent threat (APT) simulations that strengthen overall security.

Using AI Brings Tough Challenges

Whenever we develop AI models, or use AI tools to create content, conduct analysis, or garner insights, we hand sensitive data over to a machine learning system. An organization is only as good as its data, which is its main weapon for winning clients and getting work done. There are numerous ways your organization’s data can be corrupted, doctored, or misused:

- Model distortion (data poisoning): Attackers can manipulate how AI models learn. By feeding in biased or deliberately mislabeled training data, they can make a model learn the wrong patterns and produce faulty outputs.

- Data breach: Building AI models requires huge amounts of data, and that data often passes through more hands than necessary. If it is not secured, it can be misused; for example, stolen personal information can be used for blackmail.

- Data fiddling: Tampering with data is a severe threat because it can make a model misinterpret what it sees. For example, if the training data for a self-driving system is tampered with, the model may misread traffic signals, leading to accidents.

- Insider threats: This goes well beyond insider trading in stocks or finance. An insider who steals an organization’s data to cause disruption or harm can bring a company down.
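One basic defense against data fiddling is to record a cryptographic hash of every training file when a dataset is approved and to re-check those hashes before each training run. The sketch below assumes the dataset is a directory of files; the manifest layout and function names are illustrative, not a standard tool. Note its limits: hashing detects changes made after the snapshot, but it cannot catch data that was already poisoned before it was hashed, and the trusted manifest must be stored where attackers cannot rewrite it.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large training files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Record a SHA-256 digest for every file under data_dir, keyed by relative path."""
    return {
        str(p.relative_to(data_dir)): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def find_tampering(data_dir: Path, manifest: dict[str, str]) -> dict[str, list[str]]:
    """Compare the files currently on disk against a trusted manifest."""
    current = build_manifest(data_dir)
    return {
        "modified": sorted(k for k in manifest if k in current and current[k] != manifest[k]),
        "missing": sorted(k for k in manifest if k not in current),
        "added": sorted(k for k in current if k not in manifest),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data_dir = Path(tmp)
        (data_dir / "labels.csv").write_text("img_001,stop_sign\n")
        trusted = build_manifest(data_dir)  # keep this outside the data directory

        # Simulate an attacker flipping a label
        (data_dir / "labels.csv").write_text("img_001,speed_limit_60\n")
        print(json.dumps(find_tampering(data_dir, trusted)))
```

A non-empty "modified", "missing", or "added" list is a signal to stop the training run and investigate before the model learns from altered data.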

Every company developing AI models should comply with the regulations that apply to it, such as the European Union's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), to keep data collection, storage, and usage safe.
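Pseudonymization is one safeguard the GDPR explicitly names, although pseudonymized data still counts as personal data under it, so this is a risk reduction rather than compliance on its own. A minimal sketch, assuming records arrive as Python dictionaries: direct identifiers are replaced with a keyed HMAC-SHA-256 token before records enter an AI pipeline, so the same person always maps to the same token while the raw value is not kept. The field names and the `PSEUDONYM_KEY` environment variable are hypothetical; in practice the key would live in a secrets manager, separate from the data.

```python
import hashlib
import hmac
import os

# Hypothetical list of fields treated as direct identifiers
PII_FIELDS = ("name", "email", "phone")


def pseudonymize(record: dict, key: bytes, pii_fields=PII_FIELDS) -> dict:
    """Return a copy of record with PII fields replaced by keyed tokens."""
    out = dict(record)  # never mutate the caller's record
    for field in pii_fields:
        value = out.get(field)
        if value is None:
            continue  # field absent or empty: nothing to replace
        # Normalize so "Jane@Example.com" and " jane@example.com" share one token
        normalized = str(value).strip().lower().encode("utf-8")
        out[field] = hmac.new(key, normalized, hashlib.sha256).hexdigest()
    return out


if __name__ == "__main__":
    # Assumed: key supplied via a hypothetical PSEUDONYM_KEY env var; fallback is demo-only
    key = os.environ.get("PSEUDONYM_KEY", "demo-key-do-not-use").encode("utf-8")
    record = {"name": "Jane Doe", "email": "Jane@Example.com", "purchase_total": 42.5}
    print(pseudonymize(record, key))
```

A keyed HMAC is used instead of a plain hash because common values such as email addresses can be guessed and hashed by an attacker; without the key, the tokens cannot be recomputed.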

Securing Artificial Intelligence Systems: Bring In the Experts

As AI tools multiply and AI is adopted across almost every industry and sector, concern about data security keeps rising. Big companies such as Facebook, Twitter, Google, OpenAI, and Microsoft are on their toes to make sure no data breaches occur. In fact, OpenAI has opened its doors to skilled professionals interested in joining and contributing to its red teaming efforts.

Microsoft, meanwhile, has been open about its own work: "on Monday, Microsoft is revealing details about the team within the company that since 2018 has been tasked with figuring out how to attack AI platforms to reveal their weaknesses." Check out this article for more information.

AI systems are undoubtedly exposed to large amounts of sensitive or personal data. Safeguarding company assets is no longer solely the responsibility of the IT or GRC (governance, risk, and compliance) team. The onus lies with everyone: marketing, HR, finance, admin, operations, and sales! Every AI initiative should be treated with caution, without overlooking the importance of data integrity.

The problem, however, is time and expertise. This is a major reason why even companies with mature information security processes choose to outsource their data security tasks to a capable provider. Doing so gives other departments, like marketing, HR, and finance, the freedom to concentrate on what they do best.

Outsourcing Threat Testing Services: Choice of the Wise

Organizations can take charge and run threat-mitigation practices internally, but they will face many challenges. Running regular security threat exercises is a highly manual task with significant resource costs, and skilled in-house staff to carry out the checks are often hard to find. Once the tests are done, it takes expert security professionals to understand the loopholes they reveal, and mitigating the identified threats by the book is no easy task. It is therefore wise to enlist AI security experts who can evaluate threats, protect critical assets, and respond in real time.

We provide consulting, implementation, and management services for DevOps, DevSecOps, DataOps, Cloud, Automated Ops, Microservices, Infrastructure, and Security.

Services offered by us: https://www.zippyops.com/services

Our Products: https://www.zippyops.com/products

Our Solutions: https://www.zippyops.com/solutions

For demo videos, check out our YouTube playlist: https://www.youtube.com/watch?v=4FYvPooN_Tg&list=PLCJ3JpanNyCfXlHahZhYgJH9-rV6ouPro

If this seems interesting, please email us at [email protected] for a call.
