Service Mesh 102: Envoy Configuration

Service Mesh 102: Envoy Configuration

In our Service Mesh 101 article, I talked about some of the basics behind a service mesh: what it is, what it does and where Envoy fits into a service mesh. Having now covered those basics, I’d like to dig into some more in-depth content focused on the basics of Envoy configuration in a service mesh.

Recall from the previous article that several different services mesh use Envoy. Istio is an example of a service mesh that leverages Envoy for its data planes. Kong’s open-source project Kuma—and its enterprise counterpart Kong Mesh—also use Envoy for the data planes.

Key Parts of Envoy’s Configuration

With all of the functionality that Envoy supports—things like dynamic configuration, multiple load balancing algorithms, expansive protocol support, retries, circuit breaking and rate limiting—sometimes an Envoy configuration can be complex. The easiest way to approach Envoy’s configuration is to break it down into the core components.

It starts with a listener

The listener is simply a way for an Envoy to receive traffic. The listener is associated with an IP address and a port. That IP address might be all zeros, meaning traffic to any IP address on this port gets captured by the listener and then passes through the rest of Envoy to get to its final destination.

Every listener also has an associated set of filters. Filters are like plugins for Envoy. They determine how Envoy does what it’s going to do with traffic. Depending on the filter in use, Envoy may act as a layer 4 proxy (without any application awareness/knowledge) or act as a layer 7 proxy (with application/protocol awareness).

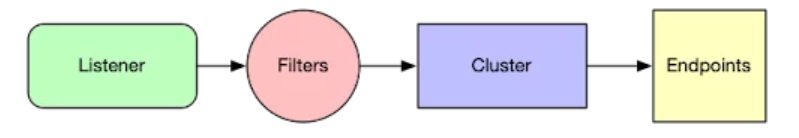

The below diagram represents using a TCP proxy filter where Envoy acts as a Layer 4 proxy. It doesn’t have any higher-level application knowledge about what kind of traffic it is or what the traffic is doing.

Figure 1: A listener with the TCP Proxy filter

When acting as a layer 4 proxy—in other words, just proxying TCP or UDP traffic without decoding the upper-level application protocols—traffic passing through the filters is next directed to a cluster.

A cluster corresponds to a destination for the traffic Envoy has received.

However, that destination may contain multiple systems—multiple virtual machines (VMs) or multiple containers—and Envoy needs to load balance traffic to the systems within the cluster. It does this by maintaining a list of endpoints within every cluster. Every cluster has one or more endpoints (a cluster has a single endpoint, or a cluster might have dozens of endpoints). The cluster also has the load balancing configuration that instructs Envoy on exactly how to load balance to the endpoints within the cluster.

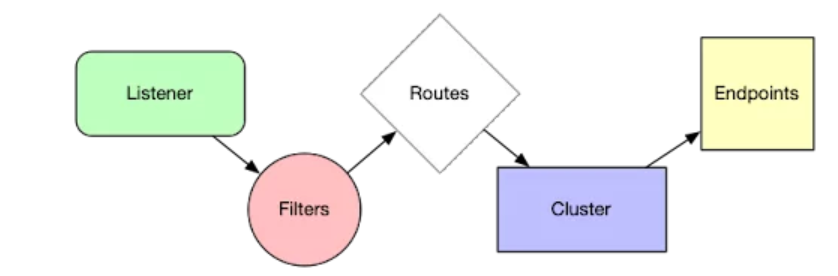

If Envoy acts as a layer 7 proxy—where it decodes the higher-level application protocols like HTTP/1.1 or HTTP/2—then the flow looks slightly different. In the case of HTTP traffic, an additional component emerges: the route.

Figure 2: Components when proxying HTTP traffic

In this case, Envoy has some of that higher-level application knowledge. Because Envoy is decoding the HTTP traffic, Envoy knows about things like the requested host, request headers, path and submitted query parameters. It’s able to make decisions based on this information. This is where routes come in—they define the decisions Envoy will make when directing traffic for different hostnames or different paths. In other words, Envoy can direct traffic to a cluster based on the requested hostname or the URL path.

To sum up, the general traffic flow looks like this:

1. First,

one or more filter(s) manipulate the traffic that comes in through a listener.

2. If the filter(s) include application-level

filters, Envoy will decode the protocols to decide on the destination cluster.

In the case of HTTP-based traffic, it’ll look at a series of routes to

determine where it needs to go (which cluster should receive the traffic).

3. Traffic is directed to an endpoint within a

cluster. If multiple endpoints exist, the traffic is load balancing according

to the cluster’s load balancing configuration.

Dynamically Configuring Envoy via APIs

Where does Envoy get all of this information? Where does it find out about listeners and routes and clusters and endpoints? All of this is going to come from the service mesh’s control plane. In the previous article, I explained that the control plane’s role is to integrate with other systems, such as Kubernetes, to gather information about the services available on the mesh.

As influenced by policies that affect traffic routing, or rate limiting or some other functionality, this information gathered by the control plane must be translated into things that Envoy understands: listeners, filters, routes, clusters and endpoints. After it is translated into Envoy’s “language,” so to speak, it must be passed down to the individual data planes—the instances of Envoy—that sit in front of the workloads. The control plane does that through the APIs that Envoy exposes called the xDS APIs.

The DS stands for “Discovery Service.” The x in the xDS is like a variable. Think of it like “x Discovery Service,” where listener, cluster, route or endpoint (among a number of other options) replaces x. In fact, every major component of Envoy and every major configuration option has a corresponding discovery service in the xDS APIs.

There’s also the Aggregated Discovery Service (ADS), which allows a control plane to kind of pass all of this information down in one big stream. You can think of the ADS as the big firehose. Envoy gets all its configuration across one stream rather than getting little bits and pieces from different areas.

Conclusion

I hope you found this overview of Envoy configuration in a service mesh helpful!

ZippyOPS Provide consulting, implementation, and management services on DevOps, DevSecOps, Cloud, Automated Ops, Microservices, Infrastructure, and Security

Services offered by us: https://www.zippyops.com/services

Our Products: https://www.zippyops.com/products

Our Solutions: https://www.zippyops.com/solutions

For Demo, videos check out YouTube Playlist:

https://www.youtube.com/watch?v=4FYvPooN_Tg&list=PLCJ3JpanNyCfXlHahZhYgJH9-rV6ouPro

If this seems interesting, please email us at [email protected] for a call.

Relevant Blogs:

Multi tier application in kubernetes

Adding load balancer to kubernetes

Recent Comments

No comments

Leave a Comment

We will be happy to hear what you think about this post